Flows

What are Flows?

Flows are the core automation units in flowctl. A flow is a sequence of actions that execute in order, with support for inputs, variables, and approvals. Flows are defined in YAML or HUML files and can run locally or on remote nodes.

Flow Structure

Every flow consists of these main sections:

- Metadata - Flow identification and configuration

- Inputs - Parameters that users provide when triggering the flow

- Actions - The actual tasks to execute

Basic Flow Example

Here’s a simple flow that greets a user:

```yaml
metadata:
  id: hello_world
  name: Hello World
  description: A simple greeting flow

inputs:
  - name: username
    type: string
    label: Username
    description: Your name
    required: true
    validation: len(username) > 0

actions:
  - id: greet
    name: Greet User
    executor: docker
    variables:
      - username: "{{ inputs.username }}"
    with:
      image: docker.io/alpine
      script: |
        echo "Hello, $username!"
        echo "message=Welcome!" >> $FC_OUTPUT
```

Metadata

The metadata section defines the flow’s identity and behavior:

```yaml
metadata:
  id: my_flow              # Unique identifier (alphanumeric + underscore)
  name: My Flow            # Human-readable name
  description: Flow description
  namespace: default       # Namespace for organization
  schedules:               # Optional: cron schedules
    - "0 0 * * *"          # Daily at midnight
    - "*/5 * * * *"        # Every 5 minutes
```

Scheduling Flows

Flows can be scheduled using cron expressions.

```yaml
inputs:
  - name: environment
    type: string
    default: "production"  # Required for scheduled flows

metadata:
  schedules:
    - "0 2 * * *"  # Run daily at 2 AM
```

Inputs

Inputs define parameters that users provide when triggering a flow. Flowctl supports multiple input types with validation.

Input Types

String

```yaml
inputs:
  - name: namespace
    type: string
    label: Namespace
    description: Target namespace
    required: true
    default: "default"
    validation: len(namespace) > 3
```

Number

```yaml
inputs:
  - name: count
    type: number
    label: Retry Count
    description: Number of retries
    required: true
    validation: count > 0 && count < 10
```

Select

```yaml
inputs:
  - name: environment
    type: select
    label: Environment
    description: Deployment environment
    options:
      - development
      - staging
      - production
    required: true
```

Checkbox

```yaml
inputs:
  - name: enable_debug
    type: checkbox
    label: Enable Debug Mode
    description: Enable verbose logging
    default: false
```

Password

```yaml
inputs:
  - name: api_token
    type: password
    label: API Token
    description: Authentication token
    required: true
```

Input Validation

Use the validation field with expressions to validate input values:

```yaml
inputs:
  - name: port
    type: number
    validation: port >= 1024 && port <= 65535

  - name: username
    type: string
    validation: len(username) >= 3 && len(username) <= 20
```

Actions

Actions are the executable steps in a flow. Actions run sequentially, and a failed action stops the actions after it from running.

Action Structure

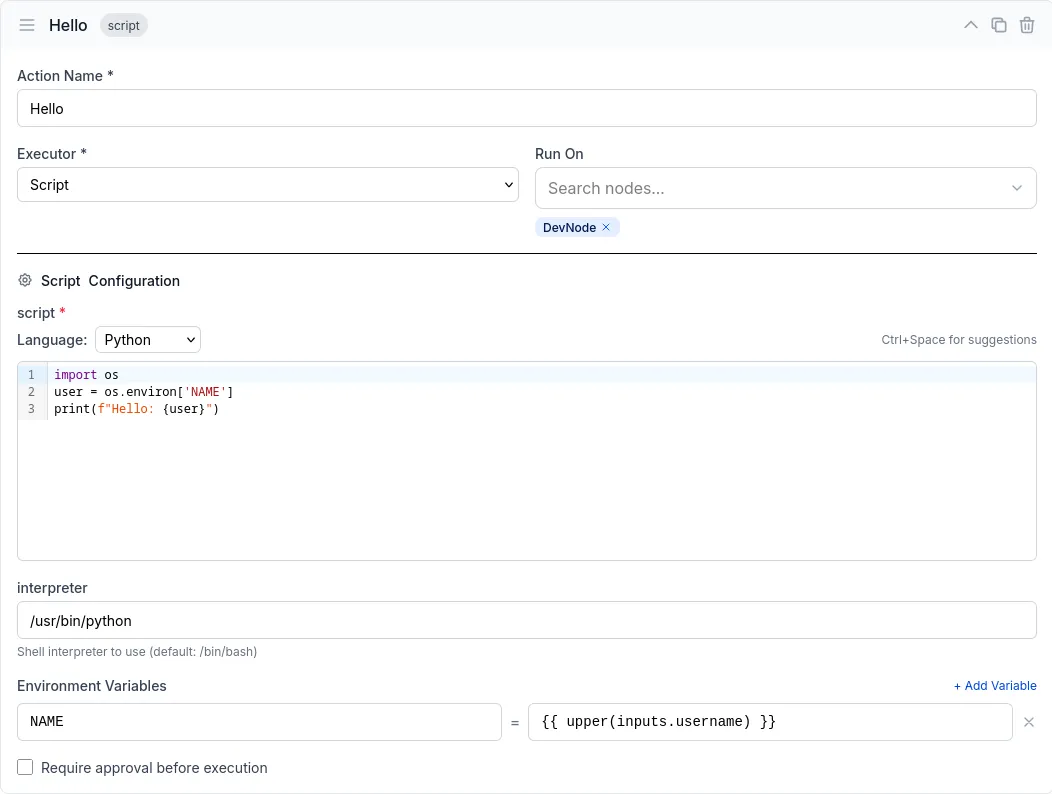

```yaml
actions:
  - id: action_id              # Unique action identifier
    name: Action Name          # Display name
    executor: docker           # Executor type: docker or script
    on:                        # Optional: remote nodes to run on
      - NodeName1
      - NodeName2
    variables:                 # Variables available to the script
      - var_name: "{{ expression }}"
    with:                      # Executor-specific configuration
      image: alpine
      script: |
        echo "Script here"
    approval: false            # Require manual approval
```

Executors

Flowctl supports two executor types:

Docker Executor

Runs scripts in Docker containers:

```yaml
- id: build
  name: Build Application
  executor: docker
  variables:
    - environment: "{{ inputs.env }}"
  with:
    image: docker.io/node:18
    script: |
      npm install
      npm run build
      echo "BUILD_ID=$(date +%s)" >> $FC_OUTPUT
```

Script Executor

Executes scripts directly on the target system (local or remote):

```yaml
- id: deploy
  name: Deploy to Server
  executor: script
  on:
    - ProductionServer
  variables:
    - app_name: "{{ inputs.app_name }}"
  with:
    script: |
      cd /opt/$app_name
      git pull
      systemctl restart $app_name
```

Variables

Variables are defined per action and can reference inputs, secrets, or previous action outputs:

```yaml
variables:
  # From inputs
  - username: "{{ inputs.username }}"

  # From secrets
  - api_key: "{{ secrets.API_KEY }}"

  # From previous action outputs
  - build_id: "{{ outputs.BUILD_ID }}"

  # Using expressions
  - uppercase_name: "{{ upper(inputs.name) }}"
  - sum: "{{ inputs.num1 + inputs.num2 }}"
  - is_prod: "{{ inputs.env == 'production' }}"
```

Flow Secrets

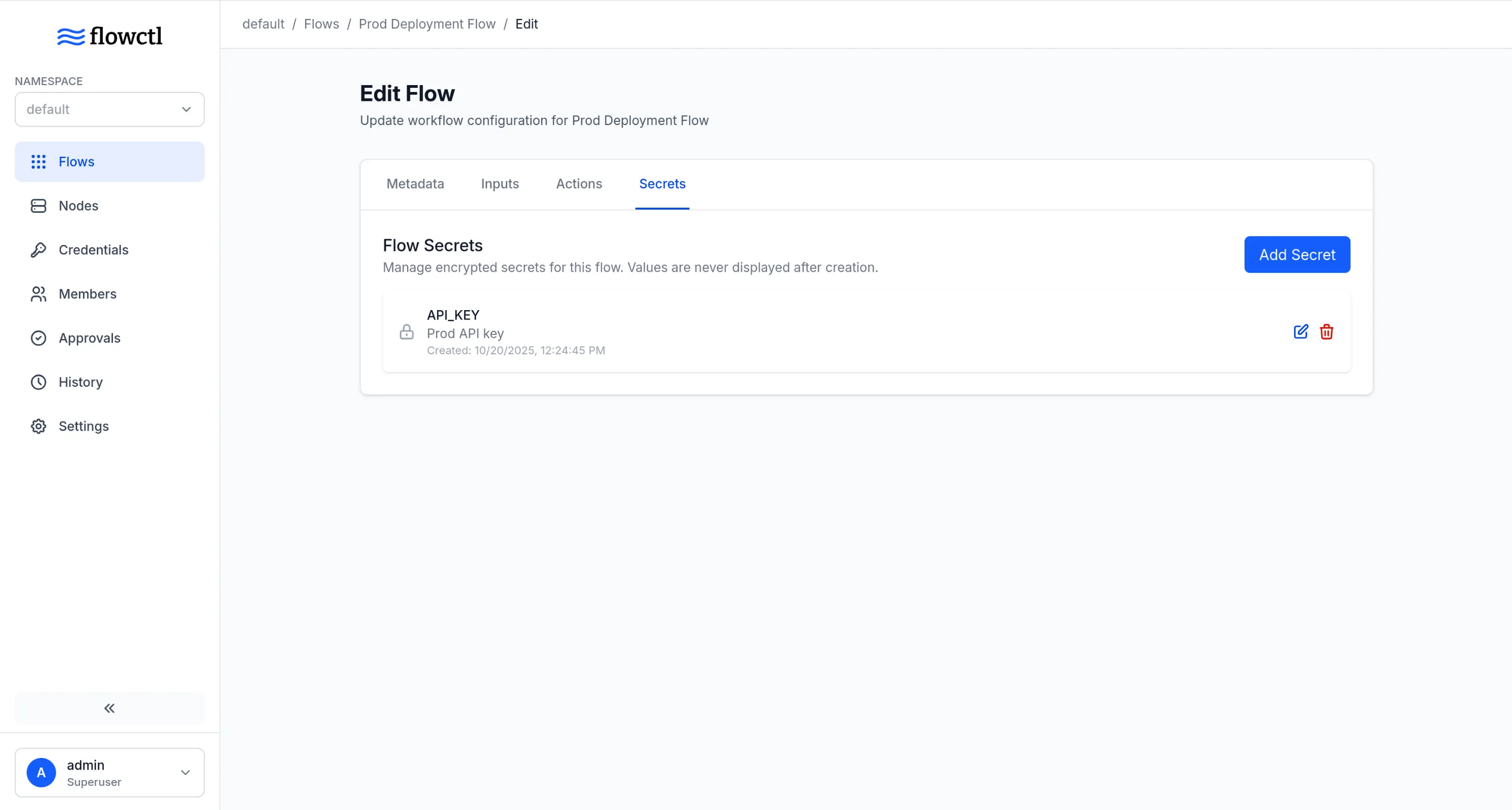

Flow secrets allow you to securely store sensitive information like API tokens, passwords, and credentials that your flow needs to access. Secrets are encrypted at rest and never displayed after creation.

Managing Secrets

Secrets are managed per flow through the flowctl UI:

- Navigate to your flow’s configuration

- Go to the “Secrets” tab

- Add, edit, or delete secrets as needed

Secrets can only be added after the flow is created.

Each secret has:

- Key: The name used to reference the secret (e.g., API_TOKEN, DB_PASSWORD)

- Value: The sensitive data (encrypted and never displayed after creation)

- Description: Optional note about what the secret is for

Using Secrets in Flows

Access secrets in your flow using the secrets context within variables:

```yaml
actions:
  - id: deploy
    name: Deploy Application
    executor: docker
    variables:
      - PGPASSWORD: "{{ secrets.DB_PASSWORD }}"
    with:
      image: alpine
      script: |
        # Secrets are available as environment variables
        echo "Connecting to database..."
        psql -U postgres -c "SELECT 1"
```

Approvals

Require manual approval before an action executes:

```yaml
- id: deploy_production
  name: Deploy to Production
  executor: docker
  approval: true  # Flow pauses here for approval
  with:
    image: alpine
    script: |
      echo "Deploying to production..."
```

When a flow reaches an approval action, it pauses and waits for a user to approve or reject it through the UI. Only users with the Admin or Reviewer role can approve requests.
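As a sketch of how an approval gate might sit inside a larger flow, the fragment below runs an unattended step first and pauses only before the deploy step. The action ids, names, and script contents are hypothetical; the fragment uses only fields already shown on this page (`executor`, `with`, `approval`).

```yaml
actions:
  # Runs as soon as the flow starts; no approval required
  - id: run_tests
    name: Run Tests
    executor: docker
    with:
      image: alpine
      script: |
        echo "Running tests..."

  # The flow pauses before this action until a user approves or rejects it
  - id: deploy_production
    name: Deploy to Production
    executor: docker
    approval: true
    with:
      image: alpine
      script: |
        echo "Deploying to production..."
```

Because actions execute in order, putting `approval: true` on the later action lets the earlier step finish before a reviewer is asked to act.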

Artifacts

Preserve files generated during action execution:

```yaml
- id: generate_report
  name: Generate Report
  executor: docker
  with:
    image: alpine
    script: |
      mkdir /reports
      echo "Report content" > $FC_ARTIFACTS/report.txt
```

Any files copied to the $FC_ARTIFACTS directory will be available as artifacts in subsequent actions under the same directory.

Artifacts from remote nodes are automatically transferred and made available to subsequent actions at $FC_ARTIFACTS/<NodeName>/path/to/artifact. If the execution was local, the <NodeName> is empty.
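For the local case, a minimal sketch: both actions below omit the `on` field, so they are assumed to run locally and, per the description above, the second action should find the file directly under `$FC_ARTIFACTS`. The action ids and the file name are illustrative.

```yaml
actions:
  - id: write_report
    name: Write Report
    executor: docker
    with:
      image: alpine
      script: |
        echo "Report content" > $FC_ARTIFACTS/report.txt

  - id: read_report
    name: Read Report
    executor: docker
    with:
      image: alpine
      script: |
        # Local execution: no node-name directory in the path
        cat $FC_ARTIFACTS/report.txt
```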

Example: Using artifacts across nodes

```yaml
actions:
  - id: create_on_remote
    name: Create File on Remote
    executor: docker
    on:
      - RemoteNode
    with:
      image: alpine
      script: |
        echo "Hello from remote" > $FC_ARTIFACTS/message.txt

  - id: use_on_local
    name: Use File Locally
    executor: docker
    with:
      image: alpine
      script: |
        # Access artifact from RemoteNode
        cat $FC_ARTIFACTS/RemoteNode/message.txt
```

Remote Execution

Execute actions on remote nodes using the on field:

```yaml
- id: remote_task
  name: Run on Remote Server
  executor: script
  on:
    - WebServer1
    - WebServer2
  with:
    script: |
      hostname
      uptime
```

The action runs on all specified nodes in parallel.
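Since the action runs once on each listed node, each node can produce its own artifacts. The sketch below assumes the per-node artifact layout described under Artifacts and reuses the node names from the example above; it writes one file on each node and reads both in a later local action. The action ids and the file name are hypothetical.

```yaml
actions:
  - id: collect_hostname
    name: Collect Hostname
    executor: docker
    on:
      - WebServer1
      - WebServer2
    with:
      image: alpine
      script: |
        hostname > $FC_ARTIFACTS/host.txt

  - id: summarize
    name: Summarize
    executor: docker
    with:
      image: alpine
      script: |
        # One directory per node that produced the artifact
        cat $FC_ARTIFACTS/WebServer1/host.txt
        cat $FC_ARTIFACTS/WebServer2/host.txt
```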

Actions can write output variables using the $FC_OUTPUT file:

```yaml
script: |
  echo "KEY=value" >> $FC_OUTPUT
  echo "RESULT=success" >> $FC_OUTPUT
```

Access these in outputs or subsequent actions:

```yaml
variables:
  - key_value: "{{ outputs.KEY }}"
```

If the output is from a remote node, access it as:

```yaml
variables:
  - key_value: "{{ outputs.RemoteNodeName.KEY }}"
```

Next Steps

- Configure Remote Nodes

- Learn about Executors

- Explore Access Control